
Well, this certainly turned out to be more complex than anticipated, but it was a fun challenge regardless. Map level navigation looks to be mostly done. It’s currently working from just one loaded map, but it shouldn’t be too much work to make that dynamic. I’m not worried about it for now.

Levels are now properly unloaded once they’re far enough from the current display area, and they stop being sent to the video card when they’re distant enough. Levels within several levels of the current display area are now preloaded on a separate thread. (That was the fun part, for sure. It’s probably one of the more significant efforts I’ve made with threads and synchronization.) The end result is a near-perfect experience moving across a map. There’s still some very slight jerkiness at times (the longer pauses in the video aren’t examples of this; those were me letting go of the arrow keys), but I’m not sure that can realistically be avoided when moving across a map at this rate. I’m sure it’ll annoy me enough to dig into at some point.
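A minimal C++ sketch of the unload/preload bookkeeping described above, assuming the map is a grid of levels addressed by (column, row) and that "several levels around" means a square of fixed radius. `LevelPos`, `levelsAround`, `LoadPlan`, and `planMove` are hypothetical names, not the engine’s actual code. When the view moves, the sketch diffs the wanted set before and after, so only levels entering the area get queued for the loader thread and only levels leaving it get freed.

```cpp
#include <set>
#include <utility>

// Hypothetical level coordinate: (column, row) in the map's grid of levels.
using LevelPos = std::pair<int, int>;

// Every level within `radius` levels of `center` (a square area), clipped to the map.
std::set<LevelPos> levelsAround(LevelPos center, int radius, int mapWidth, int mapHeight) {
    std::set<LevelPos> result;
    for (int y = center.second - radius; y <= center.second + radius; ++y) {
        for (int x = center.first - radius; x <= center.first + radius; ++x) {
            if (x >= 0 && y >= 0 && x < mapWidth && y < mapHeight) {
                result.insert(LevelPos(x, y));
            }
        }
    }
    return result;
}

struct LoadPlan {
    std::set<LevelPos> toLoad;    // entered the preload area: hand to the loader thread
    std::set<LevelPos> toUnload;  // left the preload area: free memory and GPU resources
};

// Diff the preload area before and after the view moves from `from` to `to`.
LoadPlan planMove(LevelPos from, LevelPos to, int radius, int mapWidth, int mapHeight) {
    const std::set<LevelPos> before = levelsAround(from, radius, mapWidth, mapHeight);
    const std::set<LevelPos> after = levelsAround(to, radius, mapWidth, mapHeight);
    LoadPlan plan;
    for (const LevelPos& p : after) {
        if (before.count(p) == 0) plan.toLoad.insert(p);
    }
    for (const LevelPos& p : before) {
        if (after.count(p) == 0) plan.toUnload.insert(p);
    }
    return plan;
}
```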

I also had to implement the start of an input system while adding the threaded level preloading, as the Windows input messages weren’t working with the design. The payoff is clearly visible in the smoothness of the movement, which now happens at a rate of 60 movements per second. You’ll also notice the frame rate isn’t dipping to absurdly low values (at this point, at least) as it did in the last video.
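One common way to get a steady 60 movements per second regardless of frame rate is a fixed-timestep accumulator. This is an assumption about the approach, not a description of the engine’s input system; `FixedStep` is a hypothetical name, and the caller is assumed to poll input and move once per step returned.

```cpp
#include <chrono>

// Hypothetical fixed-rate movement clock. Each frame reports how much time
// passed, and the function returns how many whole 1/N s steps have elapsed,
// so movement speed is the same whether the game renders 60 or 600 frames a second.
class FixedStep {
public:
    explicit FixedStep(int stepsPerSecond)
        : step_(std::chrono::nanoseconds(1000000000LL / stepsPerSecond)) {}

    // Returns how many movement steps to apply this frame (0 on very fast frames).
    int advance(std::chrono::nanoseconds frameTime) {
        accumulated_ += frameTime;
        int steps = 0;
        while (accumulated_ >= step_) {
            accumulated_ -= step_;  // keep the leftover time for the next frame
            ++steps;
        }
        return steps;
    }

private:
    std::chrono::nanoseconds step_;
    std::chrono::nanoseconds accumulated_{0};
};
```

In a game loop, `frameTime` would be the difference between two `std::chrono::steady_clock::now()` readings. Rendering stays uncapped while movement speed stays constant.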

A portion of the debugger is visible in the video. I created a visualization of the entire map showing the currently displayed levels (pink), levels that aren’t rendered but are quick to render (blue), and loaded/preloaded levels (green). Uncolored areas are levels that aren’t loaded.
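The debugger’s color categories map naturally onto a distance test. A hedged sketch, assuming distance is counted in whole levels from the displayed rectangle and treating the ring widths (1 level quick-to-render, 3 levels preloaded) as placeholders; `LevelState`, `Rect`, and `classify` are hypothetical names.

```cpp
#include <algorithm>

// Hypothetical level states, matching the debugger colors.
enum class LevelState { Displayed, QuickToRender, Loaded, Unloaded };

// The rectangle of currently displayed levels, inclusive, in level coordinates.
struct Rect { int minX, minY, maxX, maxY; };

// Distance in whole levels from the displayed rectangle (diagonal neighbors count as 1).
int levelDistance(int x, int y, const Rect& shown) {
    const int dx = std::max({shown.minX - x, 0, x - shown.maxX});
    const int dy = std::max({shown.minY - y, 0, y - shown.maxY});
    return std::max(dx, dy);
}

// Ring widths are illustrative assumptions, not the engine's real values.
LevelState classify(int x, int y, const Rect& shown, int renderRing = 1, int preloadRing = 3) {
    const int d = levelDistance(x, y, shown);
    if (d == 0) return LevelState::Displayed;               // pink
    if (d <= renderRing) return LevelState::QuickToRender;  // blue
    if (d <= preloadRing) return LevelState::Loaded;        // green
    return LevelState::Unloaded;                            // uncolored
}
```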

[video=youtube_share;ByVfPtTjYSE]https://www.youtube.com/watch?v=ByVfPtTjYSE[/video]

That is butter smooth now. You’re making a lot of progress on this!

This shows you know what you’re doing… I’d probably have capped it at 60 fps without accounting for everything else that still needs to be rendered and computed, then kept working without watching overall speed and frame rate. With it capped at 60 fps from the start, I wouldn’t have seen my mistakes or known where to optimize.

Good job, Codr!

Obviously I should limit it to 20 FPS. /facepalm

I’ve still been prodding at this. I discovered that map level loading still wasn’t smooth when the level files hadn’t been accessed recently and so weren’t cached by the OS. So I’ve been spending a lot of time researching thread synchronization to see what I might be doing wrong. I’m close to having it figured out, but effective synchronization is complicated as fuck.

Edit: I think I have it resolved. Level loading can now be split across numerous threads instead of just one, and it uses no thread locking. Moving on to a simple object that displays random images now.
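One way to split loading across many threads with no locking is to have each worker claim the next level index from a shared atomic counter and write its result into a slot nobody else touches. This is a sketch of that general technique, not necessarily what the engine does; `loadAll` and the `loadLevel` callback are hypothetical, and `loadLevel` must itself be safe to call from several threads at once.

```cpp
#include <atomic>
#include <cstddef>
#include <thread>
#include <vector>

// Results go into a vector sized up front, one slot per level. Each worker
// claims the next unclaimed index with an atomic fetch_add, so no two threads
// ever write the same slot, and join() makes every slot visible to the caller.
// Avoid Result = bool: std::vector<bool> packs bits, so separate slots share memory.
template <typename Result, typename LoadFn>
std::vector<Result> loadAll(std::size_t levelCount, unsigned threadCount, LoadFn loadLevel) {
    std::vector<Result> results(levelCount);
    std::atomic<std::size_t> next{0};
    std::vector<std::thread> workers;
    for (unsigned t = 0; t < threadCount; ++t) {
        workers.emplace_back([&] {
            for (std::size_t i = next.fetch_add(1); i < levelCount; i = next.fetch_add(1)) {
                results[i] = loadLevel(i);  // slot i belongs to this thread alone
            }
        });
    }
    for (std::thread& worker : workers) worker.join();
    return results;
}
```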

[QUOTE=Codr;n188899]

I’ve still been prodding at this. I discovered that map level loading still wasn’t smooth when the level files hadn’t been accessed recently and so weren’t cached by the OS. So I’ve been spending a lot of time researching thread synchronization to see what I might be doing wrong. I’m close to having it figured out, but effective synchronization is complicated as fuck.

Edit: I think I have it resolved. Level loading can now be split across numerous threads instead of just one, and it uses no thread locking. Moving on to a simple object that displays random images now.

[/QUOTE]

If you don’t mind elaborating, I’d like to know what you found so complicated. In my mind it’s as simple as claiming a semaphore when accessing memory that’s shared among multiple threads. Obviously you’ll want to be in and out as quickly as possible so you don’t block, for any noticeable period of time, a dependent thread that’s waiting on, say… a level to be loaded.
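The semaphore approach described here, sketched in C++ with `std::mutex` standing in for a binary semaphore. `FinishedLevels`, `publish`, and `tryTake` are hypothetical names; the point is that each critical section is a single push or pop, so neither thread holds the lock for a noticeable time.

```cpp
#include <deque>
#include <mutex>
#include <string>
#include <utility>

// Hand-off point between a loader thread and the main thread. Only one thread
// at a time touches `ready_`. The slow work (file I/O, parsing) happens before
// publish() and after tryTake(), outside the lock.
class FinishedLevels {
public:
    // Loader thread: publish one finished level.
    void publish(std::string levelData) {
        std::lock_guard<std::mutex> lock(mutex_);
        ready_.push_back(std::move(levelData));
    }

    // Main thread: returns false immediately if nothing is ready,
    // instead of waiting for a load to finish.
    bool tryTake(std::string& out) {
        std::lock_guard<std::mutex> lock(mutex_);
        if (ready_.empty()) return false;
        out = std::move(ready_.front());
        ready_.pop_front();
        return true;
    }

private:
    std::mutex mutex_;
    std::deque<std::string> ready_;
};
```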

[QUOTE=tricxta;n188903]

If you don’t mind elaborating, I’d like to know what you found so complicated. In my mind it’s as simple as claiming a semaphore when accessing memory that’s shared among multiple threads. Obviously you’ll want to be in and out as quickly as possible so you don’t block, for any noticeable period of time, a dependent thread that’s waiting on, say… a level to be loaded.

[/QUOTE]

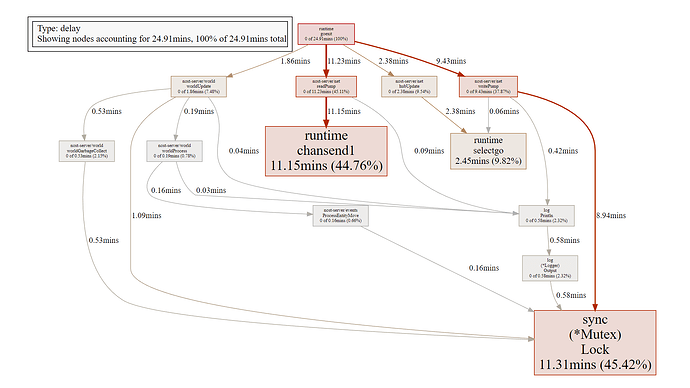

I think I can explain from my perspective. When I profile my engine’s server, the biggest cause of blocking at the moment is mutex locking. A mutex is a great and sometimes necessary synchronization primitive, but it comes with a cost if it isn’t used at exactly the right time (which can’t be guaranteed).

In theory, the right timing can only be approached through careful attention, planning, and profiling. It essentially comes down to having each thread waste as little time as possible. The best simplified analogy I’ve seen is this image.

When sharing memory between threads, you’re always stopping execution whenever you signal the next worker to begin working or make sure the memory you’re sharing has been received (which you have to do if that memory is important). It’s like handing work off to the next worker, which takes time away from your own work (in this case, grabbing a book, checking that it’s the right book, and handing it to the next worker). You’re basically playing hacky sack or hot potato with the memory you’re sharing. If the worker doesn’t have enough work, it sits idle, and the time you just spent handing work off isn’t worth it. That’s why single-threaded programming is much easier if you don’t know exactly what you’re doing: multithreaded programming can cost performance if done poorly, and sometimes it isn’t the right tool for the job.

So, in theory, you want to distribute work evenly between threads, make sure each thread has something to do while the others are working, and synchronize at the exact moment the receiving thread is ready to work and the sharing thread is done. That only holds in theory; some blocking is inevitable when sharing memory, and you just have to reduce it as much as possible. At the same time, mutex locks stop other threads from accessing that memory (halting their execution and getting in their way) in order to avoid data races, so you really have to make sure a lock is the only option and use it as little as possible. One positive thing to remember is that simultaneous reads of memory don’t cause a data race, but simultaneous writes, or a write alongside a read, do. That’s why it’s generally best practice to mutate data as little as possible.
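Because concurrent reads don’t race, a reader/writer lock can let any number of readers in at once and make only writers exclusive. A sketch using C++17’s `std::shared_mutex` (assumes a C++17 compiler); `LevelNames` and the level file name in the usage are hypothetical.

```cpp
#include <map>
#include <mutex>
#include <shared_mutex>
#include <string>
#include <utility>

// Lookup table read by many threads and written rarely.
class LevelNames {
public:
    // Shared lock: any number of readers may hold it at the same time.
    std::string find(int levelId) const {
        std::shared_lock<std::shared_mutex> lock(mutex_);
        auto it = names_.find(levelId);
        return it == names_.end() ? std::string() : it->second;
    }

    // Exclusive lock: waits for current readers and blocks new ones while writing.
    void set(int levelId, std::string name) {
        std::unique_lock<std::shared_mutex> lock(mutex_);
        names_[levelId] = std::move(name);
    }

private:
    mutable std::shared_mutex mutex_;
    std::map<int, std::string> names_;
};
```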

If possible, I think you should always have stateful threads, where each piece of data is owned by exactly one thread and you only communicate to that thread what needs to be changed. But sometimes you just have to take it case by case.
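A sketch of the single-owner idea: one thread owns the data outright, and other threads only post change requests to its mailbox. The mailbox itself still needs a lock, but the owned data never does. `TotalOwner`, `post`, and `stopAndGet` are hypothetical names; a running total stands in for real game state.

```cpp
#include <condition_variable>
#include <deque>
#include <mutex>
#include <thread>

// `total_` is read and written only by the owner thread while it runs.
// Other threads post deltas to the mailbox, the only state guarded by the mutex.
class TotalOwner {
public:
    TotalOwner() : owner_([this] { run(); }) {}
    ~TotalOwner() {
        if (owner_.joinable()) stopAndGet();
    }

    // Any thread: request a change instead of modifying the data directly.
    void post(int delta) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            inbox_.push_back(delta);
        }
        cv_.notify_one();
    }

    // Call once: the owner applies every posted change, exits, and is joined.
    // join() makes the final total safely visible to the calling thread.
    int stopAndGet() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            stopping_ = true;
        }
        cv_.notify_one();
        owner_.join();
        return total_;
    }

private:
    void run() {
        std::unique_lock<std::mutex> lock(mutex_);
        for (;;) {
            cv_.wait(lock, [this] { return stopping_ || !inbox_.empty(); });
            if (inbox_.empty()) return;  // stopping, and nothing left to apply
            const int delta = inbox_.front();
            inbox_.pop_front();
            lock.unlock();
            total_ += delta;  // owned data: no lock needed
            lock.lock();
        }
    }

    std::mutex mutex_;
    std::condition_variable cv_;
    std::deque<int> inbox_;
    bool stopping_ = false;
    int total_ = 0;
    std::thread owner_;  // declared last so it starts after the members it uses
};
```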

Rou explained some of it. There’s a lot more, though. Locking isn’t the only way to synchronize. Modern processors have instructions that ensure one thread’s memory changes propagate to all other threads (caches are involved here) without any thread having to wait on a lock. There are also instructions that make a write to a location atomic, meaning the whole value is written before another thread can read it; otherwise a reader could get, say, only 2 updated bytes out of the intended 4. There are lots of resources online that cover these things. My favorites are Herb Sutter’s presentations. On top of being somewhat amusing, he does a great job of explaining the details.
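Lock-free publication with C++11 atomics, as a minimal sketch: the loader writes ordinary data, then sets an atomic flag with release ordering; a reader that sees the flag with acquire ordering is guaranteed to see that data too, and `std::atomic` guarantees no reader ever observes a half-written flag. `LevelSlot`, `publishLevel`, and `tryReadTiles` are hypothetical names.

```cpp
#include <atomic>

// One slot per level. Publish each slot once; writing tileCount again
// after `ready` is set would be a data race.
struct LevelSlot {
    int tileCount = 0;               // ordinary data, written before publishing
    std::atomic<bool> ready{false};  // publication flag
};

// Loader thread: write the data first, then publish it with release ordering.
void publishLevel(LevelSlot& slot, int tiles) {
    slot.tileCount = tiles;
    slot.ready.store(true, std::memory_order_release);
}

// Any thread: returns -1 if the level isn't published yet. Never blocks.
int tryReadTiles(const LevelSlot& slot) {
    if (!slot.ready.load(std::memory_order_acquire)) return -1;
    return slot.tileCount;  // safe: the acquire load synchronized with the release store
}
```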

As for my actual problem, I had some other ideas after my last edit. I did some testing, and I don’t think threads were the actual issue in my case. On a fresh start of Windows, it takes a full 15 seconds to load the file data for 1218 levels. Once the files have been cached, it takes about 4 seconds. The issue isn’t even in my code; it’s purely the file operations, which can’t be improved further as-is. I’m thinking I may implement some means of combining all map levels into a single file, as I believe that’s the only solution. I get why modern games combine everything into large archives now. Even Graal had issues with this, but it was obviously much slower in general, so it wasn’t so bad.
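A minimal sketch of combining levels into one file: a small index at the front records each level’s offset and size, so a single level can be read with one seek and one read. The layout, `writePack`, and `readPackedLevel` are hypothetical and not an existing archive format; a real pack would likely add a version field, level names, and possibly compression.

```cpp
#include <cstddef>
#include <cstdint>
#include <fstream>
#include <string>
#include <vector>

// Hypothetical layout: [uint32 count][count x (uint64 offset, uint64 size)][level data ...]
// Offsets are from the start of the file. Integers use the host's byte order,
// so a pack is only portable between machines with the same endianness.
bool writePack(const std::string& path, const std::vector<std::string>& levels) {
    std::ofstream out(path, std::ios::binary);
    if (!out) return false;
    const std::uint32_t count = static_cast<std::uint32_t>(levels.size());
    out.write(reinterpret_cast<const char*>(&count), sizeof count);
    std::uint64_t offset = sizeof count + std::uint64_t(count) * 2 * sizeof(std::uint64_t);
    for (const std::string& level : levels) {  // index: where each level starts
        const std::uint64_t size = level.size();
        out.write(reinterpret_cast<const char*>(&offset), sizeof offset);
        out.write(reinterpret_cast<const char*>(&size), sizeof size);
        offset += size;
    }
    for (const std::string& level : levels) {  // level data, back to back
        out.write(level.data(), static_cast<std::streamsize>(level.size()));
    }
    out.flush();
    return static_cast<bool>(out);
}

// Reads a single level: one small index lookup, then one seek and one read.
bool readPackedLevel(const std::string& path, std::uint32_t index, std::string& data) {
    std::ifstream in(path, std::ios::binary);
    std::uint32_t count = 0;
    if (!in.read(reinterpret_cast<char*>(&count), sizeof count) || index >= count) return false;
    in.seekg(static_cast<std::streamoff>(sizeof count + std::uint64_t(index) * 2 * sizeof(std::uint64_t)));
    std::uint64_t offset = 0, size = 0;
    in.read(reinterpret_cast<char*>(&offset), sizeof offset);
    in.read(reinterpret_cast<char*>(&size), sizeof size);
    if (!in) return false;
    data.assign(static_cast<std::size_t>(size), '\0');
    in.seekg(static_cast<std::streamoff>(offset));
    if (size > 0) in.read(&data[0], static_cast<std::streamsize>(size));
    return static_cast<bool>(in);
}
```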

[QUOTE=Rou;n188906]

I think I can explain from my perspective. When I profile my engine’s server, the biggest cause of blocking at the moment is mutex locking. A mutex is a great and sometimes necessary synchronization primitive, but it comes with a cost if it isn’t used at exactly the right time (which can’t be guaranteed).

In theory, the right timing can only be approached through careful attention, planning, and profiling. It essentially comes down to having each thread waste as little time as possible. The best simplified analogy I’ve seen is this image.

When sharing memory between threads, you’re always stopping execution whenever you signal the next worker to begin working or make sure the memory you’re sharing has been received (which you have to do if that memory is important). It’s like handing work off to the next worker, which takes time away from your own work (in this case, grabbing a book, checking that it’s the right book, and handing it to the next worker). You’re basically playing hacky sack or hot potato with the memory you’re sharing. If the worker doesn’t have enough work, it sits idle, and the time you just spent handing work off isn’t worth it. That’s why single-threaded programming is much easier if you don’t know exactly what you’re doing: multithreaded programming can cost performance if done poorly, and sometimes it isn’t the right tool for the job.

So, in theory, you want to distribute work evenly between threads, make sure each thread has something to do while the others are working, and synchronize at the exact moment the receiving thread is ready to work and the sharing thread is done. That only holds in theory; some blocking is inevitable when sharing memory, and you just have to reduce it as much as possible. At the same time, mutex locks stop other threads from accessing that memory (halting their execution and getting in their way) in order to avoid data races, so you really have to make sure a lock is the only option and use it as little as possible. One positive thing to remember is that simultaneous reads of memory don’t cause a data race, but simultaneous writes, or a write alongside a read, do. That’s why it’s generally best practice to mutate data as little as possible.

If possible, I think you should always have stateful threads, where each piece of data is owned by exactly one thread and you only communicate to that thread what needs to be changed. But sometimes you just have to take it case by case.

[/QUOTE]

I take it all of this applies to scenarios where you can’t make a copy of the memory you need, release the lock, and then perform the more expensive computation on the copy to get the answer you’re after?
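The copy-then-release pattern from this question, sketched in C++: the lock is held only long enough to copy the shared data, and the expensive work runs on the private copy. `SharedHeights` is a hypothetical name, and the sum is a stand-in for a real computation.

```cpp
#include <mutex>
#include <numeric>
#include <utility>
#include <vector>

// Shared data with a copy-then-release reader.
class SharedHeights {
public:
    void set(std::vector<int> heights) {
        std::lock_guard<std::mutex> lock(mutex_);
        heights_ = std::move(heights);
    }

    long long expensiveSum() const {
        std::vector<int> snapshot;
        {
            std::lock_guard<std::mutex> lock(mutex_);
            snapshot = heights_;  // the only work done while holding the lock
        }                         // lock released here
        // Stand-in for the expensive computation, done on the private copy.
        return std::accumulate(snapshot.begin(), snapshot.end(), 0LL);
    }

private:
    mutable std::mutex mutex_;
    std::vector<int> heights_;
};
```

The trade-offs are the cost of the copy itself and that the result may already be stale by the time it’s computed, which is fine for some data and not for others.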

[QUOTE=Codr;n188907]

Rou explained some of it. There’s a lot more, though. Locking isn’t the only way to synchronize. Modern processors have instructions that ensure one thread’s memory changes propagate to all other threads (caches are involved here) without any thread having to wait on a lock. There are also instructions that make a write to a location atomic, meaning the whole value is written before another thread can read it; otherwise a reader could get, say, only 2 updated bytes out of the intended 4. There are lots of resources online that cover these things. My favorites are Herb Sutter’s presentations. On top of being somewhat amusing, he does a great job of explaining the details.

As for my actual problem, I had some other ideas after my last edit. I did some testing, and I don’t think threads were the actual issue in my case. On a fresh start of Windows, it takes a full 15 seconds to load the file data for 1218 levels. Once the files have been cached, it takes about 4 seconds. The issue isn’t even in my code; it’s purely the file operations, which can’t be improved further as-is. I’m thinking I may implement some means of combining all map levels into a single file, as I believe that’s the only solution. I get why modern games combine everything into large archives now. Even Graal had issues with this, but it was obviously much slower in general, so it wasn’t so bad.

[/QUOTE]

Does this mean you might consider an archiving tool that loads files (even compressed ones) into memory so file I/O doesn’t become the bottleneck? Surely you could devise a scheme where you divide the map into chunks and bulk-load levels a chunk at a time. After all… memory is cheap nowadays, right?

[QUOTE=tricxta;n188910]

I take it all of this applies to scenarios where you can’t make a copy of the memory you need, release the lock, and then perform the more expensive computation on the copy to get the answer you’re after?

Does this mean you might consider an archiving tool that loads files (even compressed ones) into memory so file I/O doesn’t become the bottleneck? Surely you could devise a scheme where you divide the map into chunks and bulk-load levels a chunk at a time. After all… memory is cheap nowadays, right?

[/QUOTE]

If you mean sending a dereferenced pointer value (a copy) to another thread rather than sharing the memory address, whether that’s safe depends on the application. If you want the sending thread to be able to change that data afterwards, and to be aware of any changes the receiving thread makes to it, then I think you need atomic operations or locking on that memory address. Say it’s a loop that sends messages to a client over a socket, but it isn’t aware that the client’s socket read loop in another thread received a closing frame; it could hang forever if it never learns of the closed status (depending on how you’ve set up recovery).

If the thread has nothing to do with the integrity of that data, and is a black box that simply takes something in and spits it out, never needing to worry about it again, then I think it’s hypothetically safe.
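A sketch of both cases, with hypothetical names: the message list is handed to the sender thread as a copy, so it needs no synchronization, while the socket’s closed status is shared state and is therefore a `std::atomic<bool>` that the sender checks before every send. No real socket API is used; the send is left as a comment.

```cpp
#include <atomic>
#include <cstddef>
#include <string>
#include <thread>
#include <utility>
#include <vector>

// Sender loop: checks the shared `closed` flag before every send. The flag is
// atomic because another thread (the socket's read loop) may set it at any time.
std::size_t sendUntilClosed(const std::vector<std::string>& messages,
                            const std::atomic<bool>& closed) {
    std::size_t sent = 0;
    for (const std::string& message : messages) {
        if (closed.load()) break;  // the read loop saw a closing frame
        (void)message;             // a real client would write `message` to its socket here
        ++sent;
    }
    return sent;
}

// Starts the sender on its own thread. `messages` is taken by value and moved
// into the lambda, so the thread owns a private copy and the caller can keep
// editing its own vector. `closed` and `sentOut` are shared by reference;
// read `sentOut` only after join().
std::thread startSender(std::vector<std::string> messages,
                        const std::atomic<bool>& closed, std::size_t& sentOut) {
    return std::thread([msgs = std::move(messages), &closed, &sentOut] {
        sentOut = sendUntilClosed(msgs, closed);
    });
}
```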

[QUOTE=tricxta;n188910]Does this mean you might consider an archiving tool that loads files (even compressed ones) into memory so file I/O doesn’t become the bottleneck? Surely you could devise a scheme where you divide the map into chunks and bulk-load levels a chunk at a time. After all… memory is cheap nowadays, right?[/QUOTE]

I’m hoping that repeatedly reading only the necessary parts of a single file is still significantly faster than accessing individual files. I’d rather not load the whole thing into RAM. A lot of RAM is going to be needed for textures in an engine like this, so I’m trying to be conservative. Yes, dedicated video cards have their own RAM, but they can also share system RAM when that runs out.
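One way to keep RAM use bounded while reading levels out of a single file on demand is a byte-budgeted least-recently-used cache: recently used levels stay in memory, and once the budget is exceeded the oldest are evicted and simply re-read later. This is a sketch under that assumption; `LevelCache` and the budget value are hypothetical.

```cpp
#include <cstddef>
#include <list>
#include <string>
#include <unordered_map>
#include <utility>

// Byte-budgeted LRU cache for level data read from a pack file.
class LevelCache {
public:
    explicit LevelCache(std::size_t budgetBytes) : budget_(budgetBytes) {}

    // Returns the cached data, or nullptr if the level has to be read from disk.
    // The pointer is only valid until the next put(), which may evict it.
    const std::string* get(int levelId) {
        auto it = index_.find(levelId);
        if (it == index_.end()) return nullptr;
        order_.splice(order_.begin(), order_, it->second);  // now most recently used
        return &it->second->second;
    }

    // Caches a level, then evicts least recently used levels until within budget.
    void put(int levelId, std::string data) {
        auto it = index_.find(levelId);
        if (it != index_.end()) {  // replacing an existing entry
            used_ -= it->second->second.size();
            order_.erase(it->second);
            index_.erase(it);
        }
        used_ += data.size();
        order_.emplace_front(levelId, std::move(data));
        index_[levelId] = order_.begin();
        while (used_ > budget_) {
            const Entry& oldest = order_.back();
            used_ -= oldest.second.size();
            index_.erase(oldest.first);
            order_.pop_back();
        }
    }

    std::size_t bytesUsed() const { return used_; }

private:
    using Entry = std::pair<int, std::string>;
    std::size_t budget_;
    std::size_t used_ = 0;
    std::list<Entry> order_;  // front = most recently used
    std::unordered_map<int, std::list<Entry>::iterator> index_;
};
```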

Also, someone help me. I’m having urges to get on WoW again, which would certainly stop most progress on this. Sometimes it just sucks to be constantly thinking when I’m already doing that 5 days a week at work.

Play something like Fortnite or Paladins. You can hit it and quit it without it taking up the rest of your life and soul, and still be satisfied.

[QUOTE=Chicken;n188921]Play something like Fortnite or Paladins. You can hit it and quit it without it taking up the rest of your life and soul, and still be satisfied.[/QUOTE]

Haha, I don’t get that much into WoW anymore. I’ve played Smite quite a bit, which I believe is similar to Paladins. WoW just has a lot more to it.

[QUOTE=Codr;n188920]Also, someone help me. I’m having urges to get on WoW again, which would certainly stop most progress on this. Sometimes it just sucks to be constantly thinking when I’m already doing that 5 days a week at work.[/QUOTE]

God, WoW is almost like a part-time job on its own, though. If you get sucked into that, you’ll surely lose motivation to work on this. Probably better to pick something that has a set ending.

[QUOTE=Yggdrasil;n188929]

God, WoW is almost like a part-time job on its own, though

[/QUOTE]

#1 reason not to play WoW. Not only is it like a job, but you pay to do that job.

[QUOTE=2ndwolf;n188931]

#1 reason not to play WoW. Not only is it like a job, but you pay to do that job.

[/QUOTE]

Hey, some people like their job.

WoW tasks are kind of job-like, but I rarely see it that way. I enjoy the feeling of progressing toward something. (Haha, obviously!)

As for taking time away from this project… look at what we’ve got now on Graal Reborn. Nobody is using it, despite it being perfectly viable (though somewhat limited). Why would a new client change that? I’m not saying I won’t continue when I can, but I’m seriously doubtful it’ll get used for real development.

I’ll use it. I have no life and nothing to do, so that’s almost a guarantee. I’m still sometimes editing “Messing” on here (my dick-around server). I don’t put much effort into Graal-related stuff, as there’s no real future in it, in my opinion. If there were a better platform for development, I personally would put a lot more effort into it.

I will also use it. I’m planning on starting a new, small project regardless of this. Having access to better tools is a plus, though.